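I ran into a packet-drop problem with the i40e driver: with an XDP program attached in native mode, if the NIC toggles, packets that the XDP program returns with XDP_TX can no longer be sent, and the tx_busy counter keeps increasing.

After some investigation, I concluded that this is a NIC firmware problem: the firmware stops updating the head field in the DMA memory region.

bpfsnoop played a significant role in the investigation, especially its --filter-arg and --output-arg options.

A brief introduction to how i40e works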

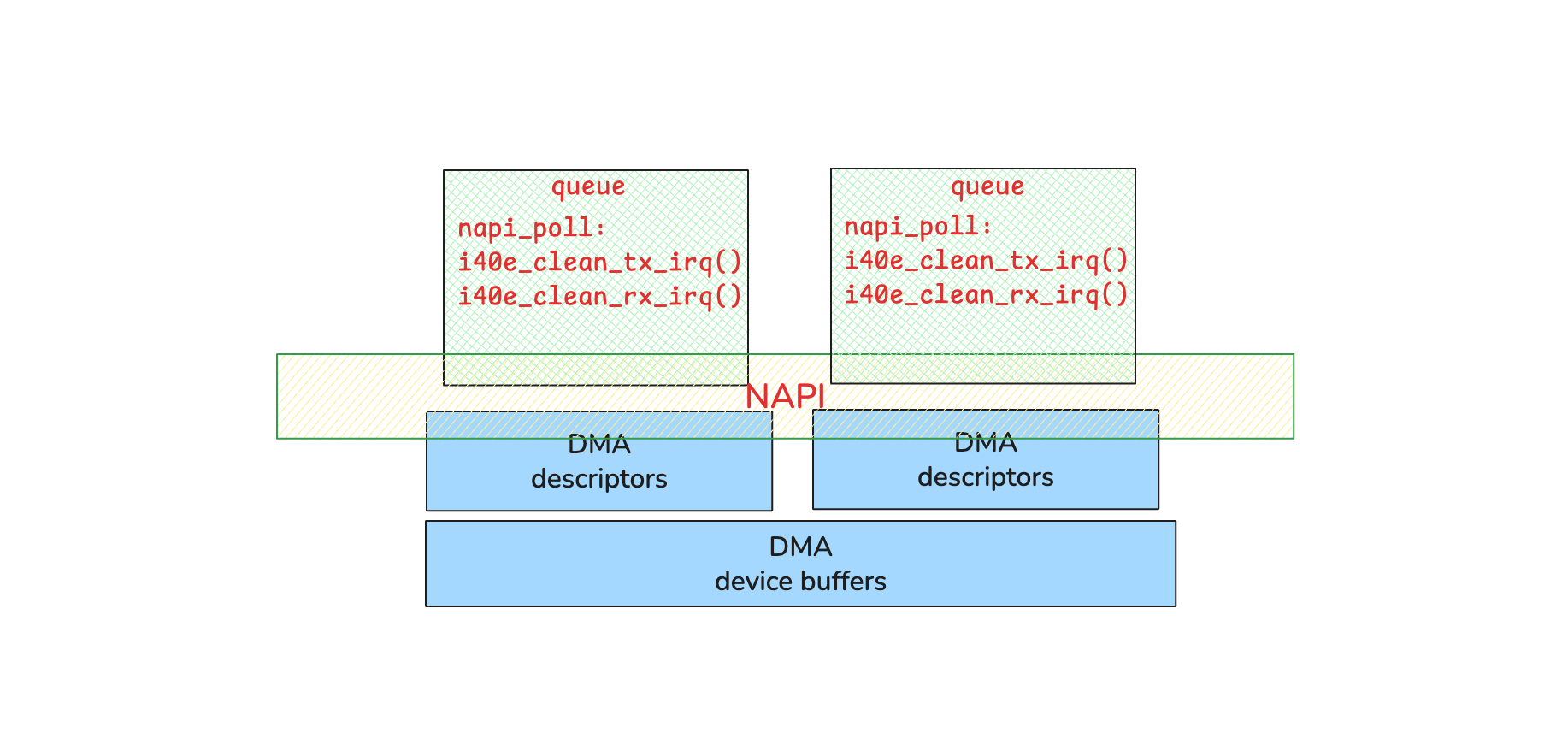

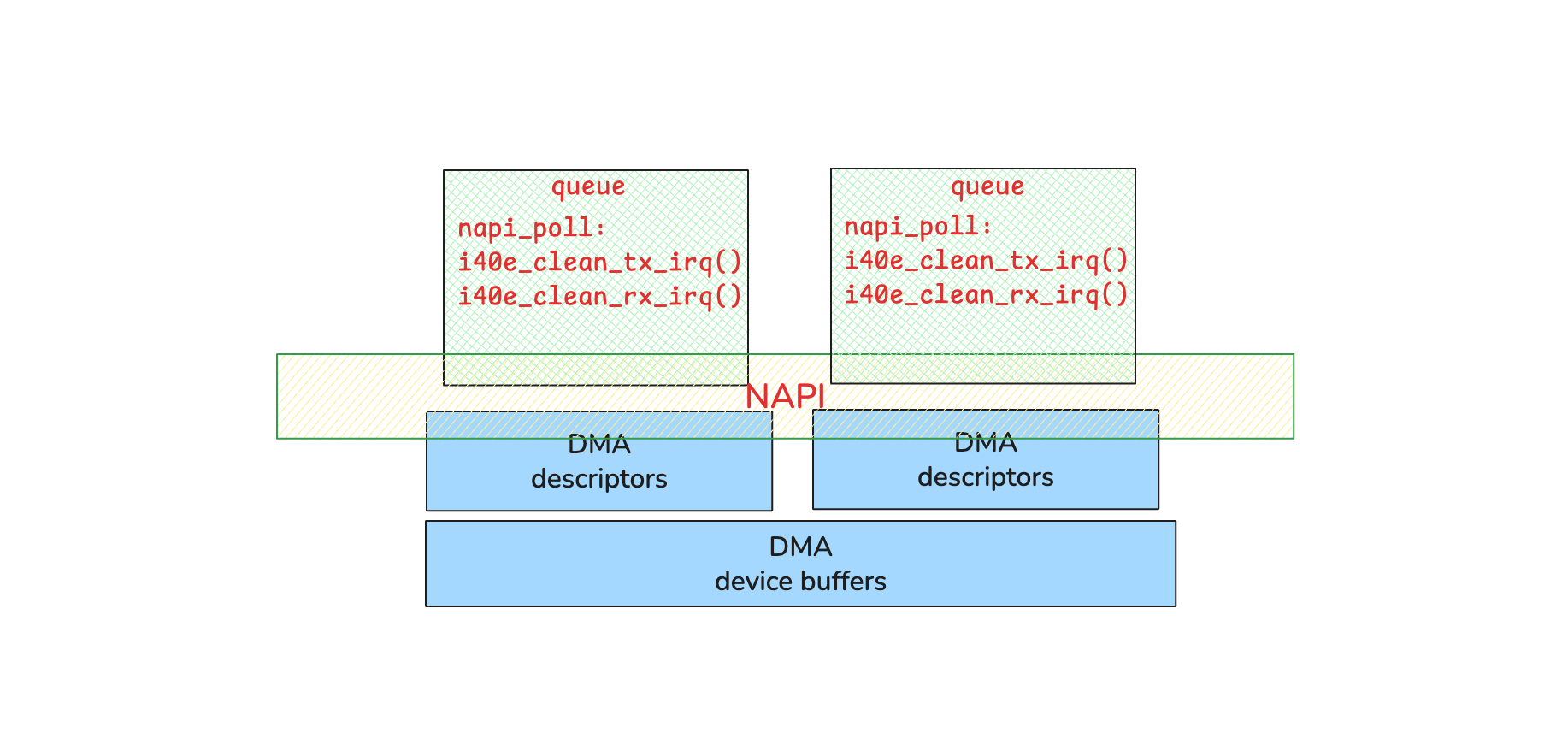

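Let's focus on the main receive/transmit flow that involves XDP programs:

As the figures above show, each queue sets up a NAPI task to receive and send packets.

Inside the NAPI task, i40e_napi_poll() is called to receive and send packets: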

    // drivers/net/ethernet/intel/i40e/i40e_txrx.c

    /**
     * i40e_napi_poll - NAPI polling Rx/Tx cleanup routine
     * @napi: napi struct with our devices info in it
     * @budget: amount of work driver is allowed to do this pass, in packets
     *
     * This function will clean all queues associated with a q_vector.
     *
     * Returns the amount of work done
     **/
    int i40e_napi_poll(struct napi_struct *napi, int budget)
    {
        // ...

        /* Since the actual Tx work is minimal, we can give the Tx a larger
         * budget and be more aggressive about cleaning up the Tx descriptors.
         */
        i40e_for_each_ring(ring, q_vector->tx) {
            bool wd = ring->xsk_pool ?
                      i40e_clean_xdp_tx_irq(vsi, ring) :
                      i40e_clean_tx_irq(vsi, ring, budget);

            if (!wd) {
                clean_complete = false;
                continue;
            }
            arm_wb |= ring->arm_wb;
            ring->arm_wb = false;
        }

        // ...

        i40e_for_each_ring(ring, q_vector->rx) {
            int cleaned = ring->xsk_pool ?
                          i40e_clean_rx_irq_zc(ring, budget_per_ring) :
                          i40e_clean_rx_irq(ring, budget_per_ring);

            work_done += cleaned;
            /* if we clean as many as budgeted, we must not be done */
            if (cleaned >= budget_per_ring)
                clean_complete = false;
        }

        // ...
    }

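Where:

- i40e_for_each_ring(ring, q_vector->tx) iterates over xdp_rings and tx_rings.
- i40e_clean_tx_irq() handles the second half of transmission (the post-transmit cleanup) for xdp_rings and tx_rings.
- i40e_for_each_ring(ring, q_vector->rx) iterates over rx_rings.
- i40e_clean_rx_irq() handles packet reception for rx_rings.

{xdp,tx,rx}_rings all manage their descriptors through the following fields:

- ring->next_to_clean: the index of the next desc to clean; it is updated in i40e_clean_tx_irq().
- ring->next_to_use: the index of the next available desc; it is updated in i40e_clean_rx_irq() (for xdp_rings, through i40e_xmit_xdp_ring(), described below).
- DMA [descriptors|head]: the NIC firmware updates the head field, which i40e_clean_tx_irq() then reads.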

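For xdp_rings:

- i40e_clean_tx_irq() is reused to handle the second half of transmission.
- Native-mode XDP programs run inside i40e_clean_rx_irq().

When the XDP program returns XDP_TX, i40e_xmit_xdp_ring() is called. It:

- Checks whether a desc is still available with I40E_DESC_UNUSED(xdp_ring); if not, it executes xdp_ring->tx_stats.tx_busy++.
- Puts the packet into the device's DMA buffer.
- Updates xdp_ring->next_to_use.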
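The three steps above can be sketched as a small userspace model. This is only an illustration under stated assumptions, not driver code: `struct model_ring`, `model_desc_unused()`, `model_xmit()` and the `MODEL_*` constants are hypothetical names, and the DMA mapping and descriptor fill are reduced to a comment. The free-descriptor arithmetic mirrors the driver's I40E_DESC_UNUSED() macro.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical userspace model of a Tx ring; only the fields used here. */
struct model_ring {
	uint16_t next_to_clean; /* next descriptor the cleanup path reclaims */
	uint16_t next_to_use;   /* next descriptor the transmit path fills */
	uint16_t count;         /* number of descriptors in the ring */
	uint64_t tx_busy;       /* bumped when no descriptor is free */
};

/* Model return codes (illustrative values, not taken from the driver). */
enum { MODEL_XDP_CONSUMED = 1, MODEL_XDP_TX = 2 };

/* Same arithmetic as I40E_DESC_UNUSED(): one slot is always kept unused. */
static int model_desc_unused(const struct model_ring *r)
{
	return ((r->next_to_clean > r->next_to_use) ? 0 : r->count) +
	       r->next_to_clean - r->next_to_use - 1;
}

/* Model of the XDP_TX enqueue: check, (map and fill), advance. */
static int model_xmit(struct model_ring *r)
{
	uint16_t i;

	assert(r->count > 0);

	/* Step 1: no free descriptor means the frame is dropped. */
	if (!model_desc_unused(r)) {
		r->tx_busy++;
		return MODEL_XDP_CONSUMED;
	}

	/* Step 2: the real driver DMA-maps the frame and fills the desc here. */

	/* Step 3: advance next_to_use, wrapping at the end of the ring. */
	i = r->next_to_use + 1;
	if (i == r->count)
		i = 0;
	r->next_to_use = i;
	return MODEL_XDP_TX;
}
```

In this model, a 4-descriptor ring whose next_to_clean never moves accepts three frames; the fourth call increments tx_busy, because the ring only frees up again once the cleanup path advances next_to_clean.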

Confirming the problem

Here is the source code of i40e_xmit_xdp_ring():

    // drivers/net/ethernet/intel/i40e/i40e_type.h

    #define I40E_DESC_UNUSED(R) \
        ((((R)->next_to_clean > (R)->next_to_use) ? 0 : (R)->count) + \
        (R)->next_to_clean - (R)->next_to_use - 1)

    // drivers/net/ethernet/intel/i40e/i40e_txrx.c

    /**
     * i40e_xmit_xdp_ring - transmits an XDP buffer to an XDP Tx ring
     * @xdpf: data to transmit
     * @xdp_ring: XDP Tx ring
     **/
    static int i40e_xmit_xdp_ring(struct xdp_frame *xdpf,
                                  struct i40e_ring *xdp_ring)
    {
        // ...

        if (!unlikely(I40E_DESC_UNUSED(xdp_ring))) {
            xdp_ring->tx_stats.tx_busy++;
            return I40E_XDP_CONSUMED;
        }
        dma = dma_map_single(xdp_ring->dev, data, size, DMA_TO_DEVICE);
        if (dma_mapping_error(xdp_ring->dev, dma))
            return I40E_XDP_CONSUMED;

        tx_bi = &xdp_ring->tx_bi[i];
        // ...

        tx_desc = I40E_TX_DESC(xdp_ring, i);
        // ...

        xdp_ring->xdp_tx_active++;
        i++;
        if (i == xdp_ring->count)
            i = 0;

        tx_bi->next_to_watch = tx_desc;
        xdp_ring->next_to_use = i;

        return I40E_XDP_TX;
    }

Trace i40e_xmit_xdp_ring() dynamically with bpfsnoop:

    $ sudo ./bpfsnoop -k i40e_xmit_xdp_ring \
        --filter-arg '(*(unsigned short *)(xdpf->data + 14 + 4 + 20 + 50 + 2)) == 0xfcff' \
        --output-arg 'xdp_ring->next_to_clean' \
        --output-arg 'xdp_ring->next_to_use' \
        --output-arg 'xdp_ring->count'
    i40e_xmit_xdp_ring args=((struct xdp_frame *)xdpf=0xffff975041bb5800, (struct i40e_ring *)xdp_ring=0xffff975efec1f980) retval=(int)1 cpu=0 process=(0:swapper/0)
    Arg attrs: (u16)'xdp_ring->next_to_clean'=0x0/0, (u16)'xdp_ring->next_to_use'=0xfff/4095, (u16)'xdp_ring->count'=0x1000/4096

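- Filter for a specific network packet.
- Output next_to_clean, next_to_use and count.

Combined with the return value 1 (#define I40E_XDP_CONSUMED BIT(0)) and the output of ethtool -S ens1f0 | grep tx_busy, this confirms that there really is no desc available: with next_to_clean = 0, next_to_use = 4095 and count = 4096, I40E_DESC_UNUSED() evaluates to 4096 + 0 - 4095 - 1 = 0.

Symptoms:

- xdp_ring->next_to_clean stays at 0.
- xdp_ring->next_to_use grows to 4095 and never wraps around to 0.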

Investigating the problem

Here is the source code of i40e_clean_tx_irq():

    // drivers/net/ethernet/intel/i40e/i40e_txrx.c

    /**
     * i40e_clean_tx_irq - Reclaim resources after transmit completes
     * @vsi: the VSI we care about
     * @tx_ring: Tx ring to clean
     * @napi_budget: Used to determine if we are in netpoll
     *
     * Returns true if there's any budget left (e.g. the clean is finished)
     **/
    static bool i40e_clean_tx_irq(struct i40e_vsi *vsi,
                                  struct i40e_ring *tx_ring, int napi_budget)
    {
        // ...

        tx_buf = &tx_ring->tx_bi[i];
        tx_desc = I40E_TX_DESC(tx_ring, i);
        i -= tx_ring->count;

        tx_head = I40E_TX_DESC(tx_ring, i40e_get_head(tx_ring));

        do {
            struct i40e_tx_desc *eop_desc = tx_buf->next_to_watch;

            /* if next_to_watch is not set then there is no work pending */
            if (!eop_desc)
                break;

            /* prevent any other reads prior to eop_desc */
            smp_rmb();

            i40e_trace(clean_tx_irq, tx_ring, tx_desc, tx_buf);
            /* we have caught up to head, no work left to do */
            if (tx_head == tx_desc)
                break;

            /* clear next_to_watch to prevent false hangs */
            tx_buf->next_to_watch = NULL;

            /* update the statistics for this packet */
            total_bytes += tx_buf->bytecount;
            total_packets += tx_buf->gso_segs;

            /* free the skb/XDP data */
            if (ring_is_xdp(tx_ring))
                xdp_return_frame(tx_buf->xdpf);
            else
                napi_consume_skb(tx_buf->skb, napi_budget);

            // ...

            /* move us one more past the eop_desc for start of next pkt */
            tx_buf++;
            tx_desc++;
            i++;
            if (unlikely(!i)) {
                i -= tx_ring->count;
                tx_buf = tx_ring->tx_bi;
                tx_desc = I40E_TX_DESC(tx_ring, 0);
            }

            prefetch(tx_desc);

            /* update budget accounting */
            budget--;
        } while (likely(budget));

        i += tx_ring->count;
        tx_ring->next_to_clean = i;
        i40e_update_tx_stats(tx_ring, total_packets, total_bytes);
        i40e_arm_wb(tx_ring, vsi, budget);

        if (ring_is_xdp(tx_ring))
            return !!budget;

        // ...
    }

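Given the symptoms confirmed above, i40e_clean_tx_irq() is not advancing next_to_clean when it processes xdp_rings.

From the code snippet above, the do {} while (); loop must be exiting through a break. But which one?

Note that i40e_trace(clean_tx_irq, tx_ring, tx_desc, tx_buf); sits between the two breaks.

Check it with bpfsnoop: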

    $ sudo ./bpfsnoop -t i40e_clean_tx_irq --show-func-proto
    Kernel tracepoints: (total 1)
    void i40e_clean_tx_irq(struct i40e_ring *ring, struct i40e_tx_desc *desc, struct i40e_tx_buffer *buf);

It is a tracepoint.

So, when a packet is sent: if this tracepoint fires, the loop was not left through the !eop_desc break but through the tx_head == tx_desc break.

    $ sudo ./bpfsnoop -t 'i40e_clean_tx_irq' \
        --filter-arg '(ring->flags&0x4) != 0 && buf->xdpf != NULL && (*(unsigned short *)(buf->xdpf->data + 14 + 4 + 20 + 50 + 2)) == 0xfcff' \
        --output-arg 'ring->next_to_clean' \
        --output-arg 'ring->next_to_use' \
        --output-arg 'ring->count' \
        --output-arg 'buf->xdpf'
    i40e_clean_tx_irq[tp] args=((struct i40e_ring *)ring=0xffff975efec1f980, (struct i40e_tx_desc *)desc=0xffff974f5f900000, (struct i40e_tx_buffer *)buf=0xffff974f71cc0000) cpu=0 process=(0:swapper/0)
    Arg attrs: (u16)'ring->next_to_clean'=0x0/0, (u16)'ring->next_to_use'=0xfff/4095, (u16)'ring->count'=0x1000/4096, (struct xdp_frame *)'buf->xdpf'=0xffff974fd8336000

The tracepoint fired, so the loop is confirmed to exit through the tx_head == tx_desc break.
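To make the consequence concrete, here is a minimal userspace sketch of the cleanup loop's two exits. It is a simplified model under stated assumptions, not the driver's code: `struct model_ring`, `model_clean()`, `enum stop_reason` and `MODEL_COUNT` are hypothetical names, the `pending[]` array stands in for a non-NULL tx_buf->next_to_watch, and `head` stands in for the value the NIC is expected to write back.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define MODEL_COUNT 8 /* hypothetical ring size for the model */

enum stop_reason { STOP_NO_WORK, STOP_AT_HEAD, STOP_BUDGET };

struct model_ring {
	bool pending[MODEL_COUNT]; /* stands in for next_to_watch != NULL */
	uint16_t next_to_clean;    /* index the cleanup resumes from */
	uint32_t head;             /* value the NIC should write back */
};

/* Reclaim descriptors from next_to_clean up to (not including) head. */
static enum stop_reason model_clean(struct model_ring *r, int budget)
{
	uint16_t i = r->next_to_clean;
	enum stop_reason why = STOP_BUDGET;

	assert(budget > 0);

	do {
		/* First exit: nothing was queued at this slot. */
		if (!r->pending[i]) {
			why = STOP_NO_WORK;
			break;
		}

		/* In the driver, the clean_tx_irq tracepoint sits here. */

		/* Second exit: we caught up with the written-back head. */
		if (r->head == i) {
			why = STOP_AT_HEAD;
			break;
		}

		r->pending[i] = false;     /* reclaim the slot */
		i = (i + 1) % MODEL_COUNT; /* wrap at the end of the ring */
		budget--;
	} while (budget);

	r->next_to_clean = i;
	return why;
}
```

With frames pending and head stuck at 0, `model_clean()` returns `STOP_AT_HEAD` on every call and leaves next_to_clean at 0, which matches the traced values. Once head moves to 3, the same call reclaims slots 0 through 2.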

Here is how tx_head is obtained:

    tx_head = I40E_TX_DESC(tx_ring, i40e_get_head(tx_ring));

    // drivers/net/ethernet/intel/i40e/i40e_txrx.h

    /**
     * i40e_get_head - Retrieve head from head writeback
     * @tx_ring: tx ring to fetch head of
     *
     * Returns value of Tx ring head based on value stored
     * in head write-back location
     **/
    static inline u32 i40e_get_head(struct i40e_ring *tx_ring)
    {
        void *head = (struct i40e_tx_desc *)tx_ring->desc + tx_ring->count;

        return le32_to_cpu(*(volatile __le32 *)head);
    }

Read head the same way i40e_get_head() does:

    $ sudo ./bpfsnoop -t 'i40e_clean_tx_irq' \
        --filter-arg '(ring->flags&0x4) != 0 && buf->xdpf != NULL && (*(unsigned short *)(buf->xdpf->data + 14 + 4 + 20 + 50 + 2)) == 0xfcff' \
        --output-arg 'ring->next_to_clean' \
        --output-arg 'ring->next_to_use' \
        --output-arg 'ring->count' \
        --output-arg 'buf->xdpf' \
        --output-arg 'le32(ring->desc + 65536)'
    i40e_clean_tx_irq[tp] args=((struct i40e_ring *)ring=0xffff975efec1f980, (struct i40e_tx_desc *)desc=0xffff974f5f900000, (struct i40e_tx_buffer *)buf=0xffff974f71cc0000) cpu=0 process=(0:swapper/0)
    Arg attrs: (u16)'ring->next_to_clean'=0x0/0, (u16)'ring->next_to_use'=0xfff/4095, (u16)'ring->count'=0x1000/4096, (struct xdp_frame *)'buf->xdpf'=0xffff974fd8336000, (void *)'le32(ring->desc + 65536, 4)'=0x0/0

Here, 65536 is 16 (sizeof desc) multiplied by 4096 (ring->count).
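The offset arithmetic can be checked with a short userspace sketch. It assumes a 16-byte descriptor made of two 64-bit words and a 32-bit little-endian head stored immediately after the last descriptor, as i40e_get_head() implies; `struct model_tx_desc`, `model_head_offset()` and `model_get_head()` are hypothetical names used only for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Stand-in for a Tx descriptor: two 64-bit words, 16 bytes in total. */
struct model_tx_desc {
	uint64_t buffer_addr;
	uint64_t cmd_type_offset_bsz;
};

/* Byte offset of the head write-back slot: right after the last desc. */
static size_t model_head_offset(uint16_t count)
{
	return (size_t)count * sizeof(struct model_tx_desc);
}

/* Read the 32-bit little-endian head that follows the descriptor array. */
static uint32_t model_get_head(const unsigned char *desc_area, uint16_t count)
{
	const unsigned char *p;

	assert(desc_area != NULL);
	p = desc_area + model_head_offset(count);

	/* Assemble the value byte by byte so the result is host-independent. */
	return (uint32_t)p[0] |
	       (uint32_t)p[1] << 8 |
	       (uint32_t)p[2] << 16 |
	       (uint32_t)p[3] << 24;
}
```

For count = 4096, `model_head_offset()` yields 65536, the same constant passed to bpfsnoop in the command above.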

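Aha, the head value read back is always 0.

That is the problem.

Debugging any further would mean debugging the NIC firmware, which is beyond my reach, so I will stop here.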

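Summary

Now that bpfsnoop supports complex C expressions for filtering and outputting function arguments, it can be used to investigate more complex kernel problems, even driver problems.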